Publications

This page is automatically generated from the White Rose database using name-string queries. It has known inaccuracies - please contact the authors directly to confirm data.

Unsupervised image translation (UIT) studies the mapping between two image domains. Since such mappings are under-constrained, existing research has pursued various desirable properties such as distributional matching or two-way consistency. In this paper, we re-examine UIT from a new perspective: distributional semantics consistency, based on the observation that data variations contain semantics, e.g., shoes varying in colors. Further, the semantics can be multi-dimensional, e.g., shoes also varying in style, functionality, etc. Given two image domains, matching these semantic dimensions during UIT will produce mappings with explicable correspondences, which has not been investigated previously. We propose distributional semantics mapping (DSM), the first UIT method which explicitly matches semantics between two domains. We show that distributional semantics has been rarely considered within and beyond UIT, even though it is a common problem in deep learning. We evaluate DSM on several benchmark datasets, demonstrating its general ability to capture distributional semantics. Extensive comparisons show that DSM not only produces explicable mappings, but also improves image quality in general.

@article{wrro186930,

volume = {9},

number = {3},

month = {September},

author = {Z Peng and H Wang and Y Yang and Y Yang and T Shao},

note = {{\copyright} The Author(s) 2023. This article is licensed under a Creative

Commons Attribution 4.0 International License, which

permits use, sharing, adaptation, distribution and reproduction

in any medium or format, as long as you give appropriate

credit to the original author(s) and the source, provide a link

to the Creative Commons licence, and indicate if changes

were made.

The images or other third party material in this article are

included in the article?s Creative Commons licence, unless

indicated otherwise in a credit line to the material. If material

is not included in the article?s Creative Commons licence and

your intended use is not permitted by statutory regulation or

exceeds the permitted use, you will need to obtain permission

directly from the copyright holder.

To view a copy of this licence, visit http://

creativecommons.org/licenses/by/4.0/.},

title = {Unsupervised image translation with distributional semantics awareness},

publisher = {SpringerOpen},

year = {2023},

journal = {Computational Visual Media},

pages = {619--631},

keywords = {generative adversarial networks (GANs);

manifold alignment; unsupervised learning;

image-to-image translation; distributional

semantics},

url = {https://eprints.whiterose.ac.uk/186930/},

abstract = {Unsupervised image translation (UIT) studies the mapping between two image domains. Since such mappings are under-constrained, existing research has pursued various desirable properties such as distributional matching or two-way consistency. In this paper, we re-examine UIT from a new perspective: distributional semantics consistency, based on the observation that data variations contain semantics, e.g., shoes varying in colors. Further, the semantics can be multi-dimensional, e.g., shoes also varying in style, functionality, etc. Given two image domains, matching these semantic dimensions during UIT will produce mappings with explicable correspondences, which has not been investigated previously. We propose distributional semantics mapping (DSM), the first UIT method which explicitly matches semantics between two domains. We show that distributional semantics has been rarely considered within and beyond UIT, even though it is a common problem in deep learning. We evaluate DSM on several benchmark datasets, demonstrating its general ability to capture distributional semantics. Extensive comparisons show that DSM not only produces explicable mappings, but also improves image quality in general.}

}Skeletal motions have been heavily relied upon for human activity recognition (HAR). Recently, a universal vulnerability of skeleton-based HAR has been identified across a variety of classifiers and data, calling for mitigation. To this end, we propose the first black-box defense method for skeleton-based HAR to our best knowledge. Our method is featured by full Bayesian treatments of the clean data, the adversaries and the classifier, leading to (1) a new Bayesian Energy-based formulation of robust discriminative classifiers, (2) a new adversary sampling scheme based on natural motion manifolds, and (3) a new post-train Bayesian strategy for black-box defense. We name our framework Bayesian Energy-based Adversarial Training or BEAT. BEAT is straightforward but elegant, which turns vulnerable black-box classifiers into robust ones without sacrificing accuracy. It demonstrates surprising and universal effectiveness across a wide range of skeletal HAR classifiers and datasets, under various attacks. Appendix and code are available.

@misc{wrro193975,

volume = {37},

number = {2},

month = {June},

author = {H Wang and Y Diao and Z Tan and G Guo},

booktitle = {The 37th AAAI conference on Aritificial Intelligence},

title = {Defending Black-box Skeleton-based Human Activity Classifiers},

address = {Washington, DC},

publisher = {AAAI},

year = {2023},

journal = {Proceedings of the AAAI Conference on Artificial Intelligence},

pages = {2546--2554},

keywords = {CV: Adversarial Attacks \& Robustness, CV: Motion \& Tracking},

url = {https://eprints.whiterose.ac.uk/193975/},

abstract = {Skeletal motions have been heavily relied upon for human activity recognition (HAR). Recently, a universal vulnerability of skeleton-based HAR has been identified across a variety of classifiers and data, calling for mitigation. To this end, we propose the first black-box defense method for skeleton-based HAR to our best knowledge. Our method is featured by full Bayesian treatments of the clean data, the adversaries and the classifier, leading to (1) a new Bayesian Energy-based formulation of robust discriminative classifiers, (2) a new adversary sampling scheme based on natural motion manifolds, and (3) a new post-train Bayesian strategy for black-box defense. We name our framework Bayesian Energy-based Adversarial Training or BEAT. BEAT is straightforward but elegant, which turns vulnerable black-box classifiers into robust ones without sacrificing accuracy. It demonstrates surprising and universal effectiveness across a wide range of skeletal HAR classifiers and datasets, under various attacks. Appendix and code are available.}

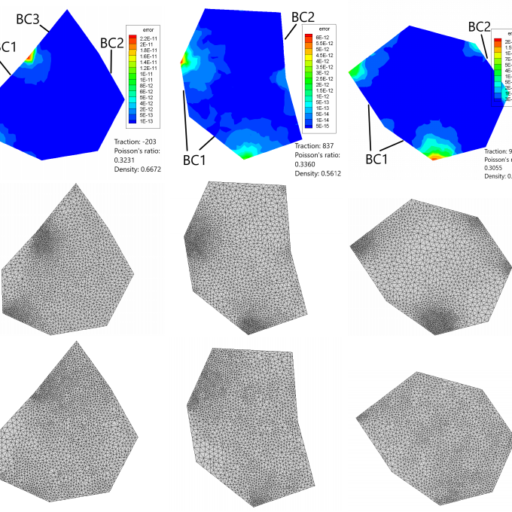

}We present a novel traffic trajectory editing method which uses spatio-temporal keyframes to control vehicles during the simulation to generate desired traffic trajectories. By taking self-motivation, path following and collision avoidance into account, the proposed force-based traffic simulation framework updates vehicle's motions in both the Frenet coordinates and the Cartesian coordinates. With the way-points from users, lane-level navigation can be generated by reference path planning. With a given keyframe, the coarse-to-fine optimization is proposed to efficiently generate the plausible trajectory which can satisfy the spatio-temporal constraints. At first, a directed state-time graph constructed along the reference path is used to search for a coarse-grained trajectory by mapping the keyframe as the goal. Then, using the information extracted from the coarse trajectory as initialization, adjoint-based optimization is applied to generate a finer trajectory with smooth motions based on our force-based simulation. We validate our method with extensive experiments.

@article{wrro191396,

volume = {41},

number = {7},

month = {March},

author = {Y Han and H Wang and X Jin},

note = {{\copyright} 2022 The Author(s) Computer Graphics Forum {\copyright} 2022 The Eurographics Association and John Wiley \& Sons Ltd. Published by John Wiley \& Sons Ltd. This is the peer reviewed version of the following article: Han, Y., Wang, H. and Jin, X. (2022), Spatio-temporal Keyframe Control of Traffic Simulation using Coarse-to-Fine Optimization. Computer Graphics Forum, 41: 541-552, which has been published in final form at https://doi.org/10.1111/cgf.14699. This article may be used for non-commercial purposes in accordance with Wiley Terms and Conditions for Use of Self-Archived Versions. This article may not be enhanced, enriched or otherwise transformed into a derivative work, without express permission from Wiley or by statutory rights under applicable legislation. Copyright notices must not be removed, obscured or modified. The article must be linked to Wiley?s version of record on Wiley Online Library and any embedding, framing or otherwise making available the article or pages thereof by third parties from platforms, services and websites other than Wiley Online Library must be prohibited.},

title = {Spatio-temporal Keyframe Control of Traffic Simulation using Coarse-to-Fine Optimization},

publisher = {Wiley},

year = {2023},

journal = {Computer Graphics Forum},

pages = {541--552},

keywords = {CCS Concepts; Computing methodologies {$\rightarrow$} Procedural animation; Interactive simulation},

url = {https://eprints.whiterose.ac.uk/191396/},

abstract = {We present a novel traffic trajectory editing method which uses spatio-temporal keyframes to control vehicles during the simulation to generate desired traffic trajectories. By taking self-motivation, path following and collision avoidance into account, the proposed force-based traffic simulation framework updates vehicle's motions in both the Frenet coordinates and the Cartesian coordinates. With the way-points from users, lane-level navigation can be generated by reference path planning. With a given keyframe, the coarse-to-fine optimization is proposed to efficiently generate the plausible trajectory which can satisfy the spatio-temporal constraints. At first, a directed state-time graph constructed along the reference path is used to search for a coarse-grained trajectory by mapping the keyframe as the goal. Then, using the information extracted from the coarse trajectory as initialization, adjoint-based optimization is applied to generate a finer trajectory with smooth motions based on our force-based simulation. We validate our method with extensive experiments.}

}Crowd simulation methods generally focus on high fidelity 2D trajectories but ignore detailed 3D body animation which is normally added in a post-processing step. We argue that this is an intrinsic flaw as detailed body motions affect the 2D trajectories, especially when interactions are present between characters, and characters and the environment. In practice, this requires labor-intensive post-processing, fitting individual character animations onto simulated trajectories where anybody interactions need to be manually specified. In this paper, we propose a new framework to integrate the modeling of crowd motions with character motions, to enable their mutual influence, so that crowd simulation also incorporates agent-agent and agent-environment interactions. The whole framework is based on a three-level hierarchical control structure to effectively control the scene at different scales efficiently and consistently. To facilitate control, each character is modeled as an agent governed by four modules: visual system, blackboard system, decision system, and animation system. The animation system of the agent model consists of two modes: a traditional Finite State Machine (FSM) animation mode, and a motion matching mode. So an agent not only retains the flexibility of FSMs, but also has the advantage of motion matching which adapts detailed body movements for interactions with other agents and the environment. Our method is universal and applicable to most interaction scenarios in various environments in crowd animation, which cannot be achieved by prior work. We validate the fluency and realism of the proposed method by extensive experiments and user studies.

@misc{wrro187875,

volume = {13443},

month = {January},

author = {X Yao and S Wang and W Sun and H Wang and Y Wang and X Jin},

series = {Lecture Notes in Computer Science},

note = {{\copyright} 2022 The Author(s). This is an author produced version of a conference paper published in Advances in Computer Graphics. Uploaded in accordance with the publisher's self-archiving policy.},

booktitle = {39th Computer Graphics International Conference, CGI 2022},

editor = {N Magnenat-Thalmann and J Zhang and J Kim and G Papagiannakis and B Sheng and D Thalmann and M Gavrilova},

title = {Crowd Simulation with Detailed Body Motion and Interaction},

publisher = {Springer},

year = {2023},

journal = {Advances in Computer Graphics},

pages = {227--238},

url = {https://eprints.whiterose.ac.uk/187875/},

abstract = {Crowd simulation methods generally focus on high fidelity 2D trajectories but ignore detailed 3D body animation which is normally added in a post-processing step. We argue that this is an intrinsic flaw as detailed body motions affect the 2D trajectories, especially when interactions are present between characters, and characters and the environment. In practice, this requires labor-intensive post-processing, fitting individual character animations onto simulated trajectories where anybody interactions need to be manually specified. In this paper, we propose a new framework to integrate the modeling of crowd motions with character motions, to enable their mutual influence, so that crowd simulation also incorporates agent-agent and agent-environment interactions. The whole framework is based on a three-level hierarchical control structure to effectively control the scene at different scales efficiently and consistently. To facilitate control, each character is modeled as an agent governed by four modules: visual system, blackboard system, decision system, and animation system. The animation system of the agent model consists of two modes: a traditional Finite State Machine (FSM) animation mode, and a motion matching mode. So an agent not only retains the flexibility of FSMs, but also has the advantage of motion matching which adapts detailed body movements for interactions with other agents and the environment. Our method is universal and applicable to most interaction scenarios in various environments in crowd animation, which cannot be achieved by prior work. We validate the fluency and realism of the proposed method by extensive experiments and user studies.}

}The use of good-quality data to inform decision making is entirely dependent on robust processes to ensure it is fit for purpose. Such processes vary between organisations, and between those tasked with designing and following them. In this paper we report on a survey of 53 data analysts from many industry sectors, 24 of whom also participated in in-depth interviews, about computational and visual methods for characterizing data and investigating data quality. The paper makes contributions in two key areas. The first is to data science fundamentals, because our lists of data profiling tasks and visualization techniques are more comprehensive than those published elsewhere. The second concerns the application question ?what does good profiling look like to those who routinely perform it?,? which we answer by highlighting the diversity of profiling tasks, unusual practice and exemplars of visualization, and recommendations about formalizing processes and creating rulebooks.

@article{wrro197083,

month = {January},

title = {Tasks and Visualizations Used for Data Profiling: A Survey and Interview Study},

author = {RA Ruddle and J Cheshire and SJ Fernstad},

publisher = {Institute of Electrical and Electronics Engineers},

year = {2023},

note = {{\copyright} 2023, IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.},

journal = {IEEE Transactions on Visualization and Computer Graphics},

keywords = {Data visualization , Task analysis , Data integrity , Interviews , Visualization , Bars , Industries},

url = {https://eprints.whiterose.ac.uk/197083/},

abstract = {The use of good-quality data to inform decision making is entirely dependent on robust processes to ensure it is fit for purpose. Such processes vary between organisations, and between those tasked with designing and following them. In this paper we report on a survey of 53 data analysts from many industry sectors, 24 of whom also participated in in-depth interviews, about computational and visual methods for characterizing data and investigating data quality. The paper makes contributions in two key areas. The first is to data science fundamentals, because our lists of data profiling tasks and visualization techniques are more comprehensive than those published elsewhere. The second concerns the application question ?what does good profiling look like to those who routinely perform it?,? which we answer by highlighting the diversity of profiling tasks, unusual practice and exemplars of visualization, and recommendations about formalizing processes and creating rulebooks.}

}Contour trees are a significant tool for data analysis as they capture both local and global variation. However, their utility has been limited by scalability, in particular for distributed computation and storage. We report a distributed data structure for storing the contour tree of a data set distributed on a cluster, based on a fan-in hierarchy, and an algorithm for computing it based on the boundary tree that represents only the superarcs of a contour tree that involve contours that cross boundaries between blocks. This allows us to limit the communication cost for contour tree computation to the complexity of the block boundaries rather than of the entire data set.

@misc{wrro190963,

booktitle = {2022 IEEE 12th Symposium on Large Data Analysis and Visualization (LDAV)},

month = {December},

title = {Distributed Hierarchical Contour Trees},

author = {HA Carr and O R{\"u}bel and GH Weber},

publisher = {IEEE},

year = {2022},

journal = {2022 IEEE 12th Symposium on Large Data Analysis and Visualization (LDAV)},

url = {https://eprints.whiterose.ac.uk/190963/},

abstract = {Contour trees are a significant tool for data analysis as they capture both local and global variation. However, their utility has been limited by scalability, in particular for distributed computation and storage. We report a distributed data structure for storing the contour tree of a data set distributed on a cluster, based on a fan-in hierarchy, and an algorithm for computing it based on the boundary tree that represents only the superarcs of a contour tree that involve contours that cross boundaries between blocks. This allows us to limit the communication cost for contour tree computation to the complexity of the block boundaries rather than of the entire data set.}

}Background Structured Medication Reviews (SMRs) are intended to help deliver the NHS Long Term Plan for medicines optimisation in people living with multiple long-term conditions and polypharmacy. It is challenging to gather the information needed for these reviews due to poor integration of health records across providers and there is little guidance on how to identify those patients most urgently requiring review. Objective To extract information from scattered clinical records on how health and medications change over time, apply interpretable artificial intelligence (AI) approaches to predict risks of poor outcomes and overlay this information on care records to inform SMRs. We will pilot this approach in primary care prescribing audit and feedback systems, and co-design future medicines optimisation decision support systems. Design DynAIRx will target potentially problematic polypharmacy in three key multimorbidity groups, namely, people with (a) mental and physical health problems, (b) four or more long-term conditions taking ten or more drugs and (c) older age and frailty. Structured clinical data will be drawn from integrated care records (general practice, hospital, and social care) covering an {$\sim$}11m population supplemented with Natural Language Processing (NLP) of unstructured clinical text. AI systems will be trained to identify patterns of conditions, medications, tests, and clinical contacts preceding adverse events in order to identify individuals who might benefit most from an SMR. Discussion By implementing and evaluating an AI-augmented visualisation of care records in an existing prescribing audit and feedback system we will create a learning system for medicines optimisation, co-designed throughout with end-users and patients.

@article{wrro197084,

volume = {12},

month = {December},

author = {LE Walker and AS Abuzour and D Bollegala and A Clegg and M Gabbay and A Griffiths and C Kullu and G Leeming and FS Mair and S Maskell and S Relton and RA Ruddle and E Shantsila and M Sperrin and T Van Staa and A Woodall and I Buchan},

note = {{\copyright} The Author(s) 2022. This is an open access article under the terms of the Creative Commons Attribution License (CC-BY 4.0), which permits unrestricted use, distribution and reproduction in any medium, provided the original work is properly cited.},

title = {The DynAIRx Project Protocol: Artificial Intelligence for dynamic prescribing optimisation and care integration in multimorbidity},

publisher = {SAGE Publications},

year = {2022},

journal = {Journal of Multimorbidity and Comorbidity},

pages = {1--14},

keywords = {multimorbidity, polypharmacy, frailty, mental health, artificial intelligence, medicines optimisation},

url = {https://eprints.whiterose.ac.uk/197084/},

abstract = {Background

Structured Medication Reviews (SMRs) are intended to help deliver the NHS Long Term Plan for medicines optimisation in people living with multiple long-term conditions and polypharmacy. It is challenging to gather the information needed for these reviews due to poor integration of health records across providers and there is little guidance on how to identify those patients most urgently requiring review.

Objective

To extract information from scattered clinical records on how health and medications change over time, apply interpretable artificial intelligence (AI) approaches to predict risks of poor outcomes and overlay this information on care records to inform SMRs. We will pilot this approach in primary care prescribing audit and feedback systems, and co-design future medicines optimisation decision support systems.

Design

DynAIRx will target potentially problematic polypharmacy in three key multimorbidity groups, namely, people with (a) mental and physical health problems, (b) four or more long-term conditions taking ten or more drugs and (c) older age and frailty. Structured clinical data will be drawn from integrated care records (general practice, hospital, and social care) covering an {$\sim$}11m population supplemented with Natural Language Processing (NLP) of unstructured clinical text. AI systems will be trained to identify patterns of conditions, medications, tests, and clinical contacts preceding adverse events in order to identify individuals who might benefit most from an SMR.

Discussion

By implementing and evaluating an AI-augmented visualisation of care records in an existing prescribing audit and feedback system we will create a learning system for medicines optimisation, co-designed throughout with end-users and patients.}

}Existing studies on formation control for unmanned aerial vehicles (UAV) have not considered encircling targets where an optimum coverage of the target is required at all times. Such coverage plays a critical role in many real-world applications such as tracking hostile UAVs. This paper proposes a new path planning approach called the Flux Guided (FG) method, which generates collision-free trajectories for multiple UAVs while maximising the coverage of target(s). Our method enables UAVs to track directly toward a target whilst maintaining maximum coverage. Furthermore, multiple scattered targets can be tracked by scaling the formation during flight. FG is highly scalable since it only requires communication between sub-set of UAVs on the open boundary of the formation?s surface. Experimental results further validate that FG generates UAV trajectories shorter than previous work and that trajectory planning for 9 leader/follower UAVs to surround a target in two different scenarios only requires 0.52 s and 0.88 s, respectively. The resulting trajectories are suitable for robotic controls after time-optimal parameterisation; we demonstrate this using a 3d dynamic particle system that tracks the desired trajectories using a PID controller.

@article{wrro187452,

volume = {205},

month = {November},

author = {J Hartley and HPH Shum and ESL Ho and H Wang and S Ramamoorthy},

note = {{\copyright} 2022 The Author(s). Published by Elsevier Ltd. This is an open access article under the terms of the Creative Commons Attribution 4.0 International (CC BY 4.0)},

title = {Formation control for UAVs using a Flux Guided approach},

publisher = {Elsevier},

journal = {Expert Systems with Applications},

year = {2022},

keywords = {Unmanned aerial vehicles; Multi-agent motion planning; Formation encirclement; Artificial harmonic field; Electric flux},

url = {https://eprints.whiterose.ac.uk/187452/},

abstract = {Existing studies on formation control for unmanned aerial vehicles (UAV) have not considered encircling targets where an optimum coverage of the target is required at all times. Such coverage plays a critical role in many real-world applications such as tracking hostile UAVs. This paper proposes a new path planning approach called the Flux Guided (FG) method, which generates collision-free trajectories for multiple UAVs while maximising the coverage of target(s). Our method enables UAVs to track directly toward a target whilst maintaining maximum coverage. Furthermore, multiple scattered targets can be tracked by scaling the formation during flight. FG is highly scalable since it only requires communication between sub-set of UAVs on the open boundary of the formation?s surface. Experimental results further validate that FG generates UAV trajectories shorter than previous work and that trajectory planning for 9 leader/follower UAVs to surround a target in two different scenarios only requires 0.52 s and 0.88 s, respectively. The resulting trajectories are suitable for robotic controls after time-optimal parameterisation; we demonstrate this using a 3d dynamic particle system that tracks the desired trajectories using a PID controller.}

}Trajectory prediction has been widely pursued in many fields, and many model-based and model-free methods have been explored. The former include rule-based, geometric or optimization-based models, and the latter are mainly comprised of deep learning approaches. In this paper, we propose a new method combining both methodologies based on a new Neural Differential Equation model. Our new model (Neural Social Physics or NSP) is a deep neural network within which we use an explicit physics model with learnable parameters. The explicit physics model serves as a strong inductive bias in modeling pedestrian behaviors, while the rest of the network provides a strong data-fitting capability in terms of system parameter estimation and dynamics stochasticity modeling. We compare NSP with 15 recent deep learning methods on 6 datasets and improve the state-of-the-art performance by 5.56\%?70\%. Besides, we show that NSP has better generalizability in predicting plausible trajectories in drastically different scenarios where the density is 2?5 times as high as the testing data. Finally, we show that the physics model in NSP can provide plausible explanations for pedestrian behaviors, as opposed to black-box deep learning. Code is available: https://github.com/realcrane/Human-Trajectory-Prediction-via-Neural-Social-Physics.

@misc{wrro189355,

volume = {13694},

month = {October},

author = {J Yue and D Manocha and H Wang},

note = {{\copyright} 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG. This is an author produced version of a conference paper published in Lecture Notes in Computer Science. Uploaded in accordance with the publisher's self-archiving policy.},

booktitle = {The European Conference on Computer Vision 2022},

title = {Human Trajectory Prediction via Neural Social Physics},

publisher = {Springer},

year = {2022},

journal = {Lecture Notes in Computer Science},

pages = {376--394},

keywords = {Human trajectory prediction; Neural differential equations},

url = {https://eprints.whiterose.ac.uk/189355/},

abstract = {Trajectory prediction has been widely pursued in many fields, and many model-based and model-free methods have been explored. The former include rule-based, geometric or optimization-based models, and the latter are mainly comprised of deep learning approaches. In this paper, we propose a new method combining both methodologies based on a new Neural Differential Equation model. Our new model (Neural Social Physics or NSP) is a deep neural network within which we use an explicit physics model with learnable parameters. The explicit physics model serves as a strong inductive bias in modeling pedestrian behaviors, while the rest of the network provides a strong data-fitting capability in terms of system parameter estimation and dynamics stochasticity modeling. We compare NSP with 15 recent deep learning methods on 6 datasets and improve the state-of-the-art performance by 5.56\%?70\%. Besides, we show that NSP has better generalizability in predicting plausible trajectories in drastically different scenarios where the density is 2?5 times as high as the testing data. Finally, we show that the physics model in NSP can provide plausible explanations for pedestrian behaviors, as opposed to black-box deep learning. Code is available: https://github.com/realcrane/Human-Trajectory-Prediction-via-Neural-Social-Physics.}

}We propose a novel method for generating high-resolution videos of talking-heads from speech audio and a single 'identity' image. Our method is based on a convolutional neural network model that incorporates a pre-trained StyleGAN generator. We model each frame as a point in the latent space of StyleGAN so that a video corresponds to a trajectory through the latent space. Training the network is in two stages. The first stage is to model trajectories in the latent space conditioned on speech utterances. To do this, we use an existing encoder to invert the generator, mapping from each video frame into the latent space. We train a recurrent neural network to map from speech utterances to displacements in the latent space of the image generator. These displacements are relative to the back-projection into the latent space of an identity image chosen from the individuals depicted in the training dataset. In the second stage, we improve the visual quality of the generated videos by tuning the image generator on a single image or a short video of any chosen identity. We evaluate our model on standard measures (PSNR, SSIM, FID and LMD) and show that it significantly outperforms recent state-of-the-art methods on one of two commonly used datasets and gives comparable performance on the other. Finally, we report on ablation experiments that validate the components of the model. The code and videos from experiments can be found at https://mohammedalghamdi.github.io/talking-heads-acm-mm/

@misc{wrro198157,

month = {October},

author = {MM Alghamdi and H Wang and AJ Bulpitt and DC Hogg},

note = {{\copyright} 2022 Copyright held by the owner/author(s). Publication rights licensed to ACM. This is an author produced version of an article published in Proceedings of the 30th ACM International Conference on Multimedia. Uploaded in accordance with the publisher's self-archiving policy.},

booktitle = {MM '22: The 30th ACM International Conference on Multimedia},

title = {Talking Head from Speech Audio using a Pre-trained Image Generator},

publisher = {ACM},

year = {2022},

journal = {Proceedings of the 30th ACM International Conference on Multimedia},

pages = {5228--5236},

url = {https://eprints.whiterose.ac.uk/198157/},

abstract = {We propose a novel method for generating high-resolution videos of talking-heads from speech audio and a single 'identity' image. Our method is based on a convolutional neural network model that incorporates a pre-trained StyleGAN generator. We model each frame as a point in the latent space of StyleGAN so that a video corresponds to a trajectory through the latent space. Training the network is in two stages. The first stage is to model trajectories in the latent space conditioned on speech utterances. To do this, we use an existing encoder to invert the generator, mapping from each video frame into the latent space. We train a recurrent neural network to map from speech utterances to displacements in the latent space of the image generator. These displacements are relative to the back-projection into the latent space of an identity image chosen from the individuals depicted in the training dataset. In the second stage, we improve the visual quality of the generated videos by tuning the image generator on a single image or a short video of any chosen identity. We evaluate our model on standard measures (PSNR, SSIM, FID and LMD) and show that it significantly outperforms recent state-of-the-art methods on one of two commonly used datasets and gives comparable performance on the other. Finally, we report on ablation experiments that validate the components of the model. The code and videos from experiments can be found at https://mohammedalghamdi.github.io/talking-heads-acm-mm/}

}Layout design is ubiquitous in many applications, e.g. architecture/urban planning, etc, which involves a lengthy iterative design process. Recently, deep learning has been leveraged to automatically generate layouts via image generation, showing a huge potential to free designers from laborious routines. While automatic generation can greatly boost productivity, designer input is undoubtedly crucial. An ideal AI-aided design tool should automate repetitive routines, and meanwhile accept human guidance and provide smart/proactive suggestions. However, the capability of involving humans into the loop has been largely ignored in existing methods which are mostly end-to-end approaches. To this end, we propose a new human-in-the-loop generative model, iPLAN, which is capable of automatically generating layouts, but also interacting with designers throughout the whole procedure, enabling humans and AI to co-evolve a sketchy idea gradually into the final design. iPLAN is evaluated on diverse datasets and compared with existing methods. The results show that iPLAN has high fidelity in producing similar layouts to those from human designers, great flexibility in accepting designer inputs and providing design suggestions accordingly, and strong generalizability when facing unseen design tasks and limited training data.

@misc{wrro185289,

month = {September},

author = {F He and Y Huang and H Wang},

note = {Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.},

booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2022},

title = {iPLAN: Interactive and Procedural Layout Planning},

publisher = {IEEE},

year = {2022},

journal = {2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages = {7783--7792},

keywords = {Productivity, Image synthesis, Layout, Training data, Human in the loop, Planning, Pattern recognition},

url = {https://eprints.whiterose.ac.uk/185289/},

abstract = {Layout design is ubiquitous in many applications, e.g. architecture/urban planning, etc, which involves a lengthy iterative design process. Recently, deep learning has been leveraged to automatically generate layouts via image generation, showing a huge potential to free designers from laborious routines. While automatic generation can greatly boost productivity, designer input is undoubtedly crucial. An ideal AI-aided design tool should automate repetitive routines, and meanwhile accept human guidance and provide smart/proactive suggestions. However, the capability of involving humans into the loop has been largely ignored in existing methods which are mostly end-to-end approaches. To this end, we propose a new human-in-the-loop generative model, iPLAN, which is capable of automatically generating layouts, but also interacting with designers throughout the whole procedure, enabling humans and AI to co-evolve a sketchy idea gradually into the final design. iPLAN is evaluated on diverse datasets and compared with existing methods. The results show that iPLAN has high fidelity in producing similar layouts to those from human designers, great flexibility in accepting designer inputs and providing design suggestions accordingly, and strong generalizability when facing unseen design tasks and limited training data.}

}Real-time in-between motion generation is universally required in games and highly desirable in existing animation pipelines. Its core challenge lies in the need to satisfy three critical conditions simultaneously: quality, controllability and speed, which renders any methods that need offline computation (or post-processing) or cannot incorporate (often unpredictable) user control undesirable. To this end, we propose a new real-time transition method to address the aforementioned challenges. Our approach consists of two key components: motion manifold and conditional transitioning. The former learns the important low-level motion features and their dynamics; while the latter synthesizes transitions conditioned on a target frame and the desired transition duration. We first learn a motion manifold that explicitly models the intrinsic transition stochasticity in human motions via a multi-modal mapping mechanism. Then, during generation, we design a transition model which is essentially a sampling strategy to sample from the learned manifold, based on the target frame and the aimed transition duration. We validate our method on different datasets in tasks where no post-processing or offline computation is allowed. Through exhaustive evaluation and comparison, we show that our method is able to generate high-quality motions measured under multiple metrics. Our method is also robust under various target frames (with extreme cases).

@article{wrro186288,

volume = {41},

number = {4},

month = {July},

author = {X Tang and H Wang and B Hu and X Gong and R Yi and Q Kou and X Jin},

title = {Real-time controllable motion transition for characters},

publisher = {Association for Computing Machinery (ACM)},

journal = {ACM Transactions on Graphics},

year = {2022},

keywords = {in-betweening, real-time, animation, motion manifold, deep learning, conditional transitioning, locomotion},

url = {https://eprints.whiterose.ac.uk/186288/},

abstract = {Real-time in-between motion generation is universally required in games and highly desirable in existing animation pipelines. Its core challenge lies in the need to satisfy three critical conditions simultaneously: quality, controllability and speed, which renders any methods that need offline computation (or post-processing) or cannot incorporate (often unpredictable) user control undesirable. To this end, we propose a new real-time transition method to address the aforementioned challenges. Our approach consists of two key components: motion manifold and conditional transitioning. The former learns the important low-level motion features and their dynamics; while the latter synthesizes transitions conditioned on a target frame and the desired transition duration. We first learn a motion manifold that explicitly models the intrinsic transition stochasticity in human motions via a multi-modal mapping mechanism. Then, during generation, we design a transition model which is essentially a sampling strategy to sample from the learned manifold, based on the target frame and the aimed transition duration. We validate our method on different datasets in tasks where no post-processing or offline computation is allowed. Through exhaustive evaluation and comparison, we show that our method is able to generate high-quality motions measured under multiple metrics. Our method is also robust under various target frames (with extreme cases).}

}Pleckstrin homology (PH) domains can recruit proteins to membranes by recognition of phosphatidylinositol phosphate (PIP) lipids. Several family members are linked to diseases including cancer. We report the systematic simulation of the interactions of 100 mammalian PH domains with PIP-containing membranes. The observed PIP interaction hotspots recapitulate crystallographic binding sites and reveal a number of insights: (i) The {\ensuremath{\beta}}1 and {\ensuremath{\beta}}2 strands and their connecting loop constitute the primary PIP interaction site but are typically supplemented by interactions at the {\ensuremath{\beta}}3-{\ensuremath{\beta}}4 and {\ensuremath{\beta}}5-{\ensuremath{\beta}}6 loops; (ii) we reveal exceptional cases such as the Exoc8 PH domain; (iii) PH domains adopt different membrane-bound orientations and induce clustering of anionic lipids; and (iv) beyond family-level insights, our dataset sheds new light on individual PH domains, e.g., by providing molecular detail of secondary PIP binding sites. This work provides a global view of PH domain/membrane association involving multivalent association with anionic lipids.

@article{wrro186303,

volume = {8},

number = {27},

month = {July},

author = {KIP Le Huray and H Wang and F Sobott and A Kalli},

note = {{\copyright} 2022 The Authors, some rights reserved; exclusive licensee American Association for the Advancement of Science.

This is an open access article under the terms of the Creative Commons Attribution License (CC-BY 4.0), which permits unrestricted use, distribution and reproduction in any medium, provided the original work is properly cited.},

title = {Systematic simulation of the interactions of Pleckstrin homology domains with membranes},

publisher = {American Association for the Advancement of Science},

year = {2022},

journal = {Science Advances},

url = {https://eprints.whiterose.ac.uk/186303/},

abstract = {Pleckstrin homology (PH) domains can recruit proteins to membranes by recognition of phosphatidylinositol phosphate (PIP) lipids. Several family members are linked to diseases including cancer. We report the systematic simulation of the interactions of 100 mammalian PH domains with PIP-containing membranes. The observed PIP interaction hotspots recapitulate crystallographic binding sites and reveal a number of insights: (i) The {\ensuremath{\beta}}1 and {\ensuremath{\beta}}2 strands and their connecting loop constitute the primary PIP interaction site but are typically supplemented by interactions at the {\ensuremath{\beta}}3-{\ensuremath{\beta}}4 and {\ensuremath{\beta}}5-{\ensuremath{\beta}}6 loops; (ii) we reveal exceptional cases such as the Exoc8 PH domain; (iii) PH domains adopt different membrane-bound orientations and induce clustering of anionic lipids; and (iv) beyond family-level insights, our dataset sheds new light on individual PH domains, e.g., by providing molecular detail of secondary PIP binding sites. This work provides a global view of PH domain/membrane association involving multivalent association with anionic lipids.}

}Generating new images with desired properties (e.g. new view/poses) from source images has been enthusiastically pursued recently, due to its wide range of potential applications. One way to ensure high-quality generation is to use multiple sources with complementary information such as different views of the same object. However, as source images are often misaligned due to the large disparities among the camera settings, strong assumptions have been made in the past with respect to the camera(s) or/and the object in interest, limiting the application of such techniques. Therefore, we propose a new general approach which models multiple types of variations among sources, such as view angles, poses, facial expressions, in a unified framework, so that it can be employed on datasets of vastly different nature. We verify our approach on a variety of data including humans bodies, faces, city scenes and 3D objects. Both the qualitative and quantitative results demonstrate the better performance of our method than the state of the art.

@misc{wrro182434,

volume = {36},

number = {2},

month = {June},

author = {J Lu and H Wang and T Shao and Y Yang and K Zhou},

note = {{\copyright} 2022, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved. This is an author produced version of a conference paper published in Proceedings of the AAAI Conference on Artificial Intelligence. Uploaded in accordance with the publisher's self-archiving policy.},

booktitle = {36th AAAI Conference on Artificial Intelligence},

title = {Pose Guided Image Generation from Misaligned Sources via Residual Flow Based Correction},

publisher = {AAAI},

year = {2022},

journal = {Proceedings of the AAAI Conference on Artificial Intelligence},

pages = {1863--1871},

url = {https://eprints.whiterose.ac.uk/182434/},

abstract = {Generating new images with desired properties (e.g. new view/poses) from source images has been enthusiastically pursued recently, due to its wide range of potential applications. One way to ensure high-quality generation is to use multiple sources with complementary information such as different views of the same object. However, as source images are often misaligned due to the large disparities among the camera settings, strong assumptions have been made in the past with respect to the camera(s) or/and the object in interest, limiting the application of such techniques. Therefore, we propose a new general approach which models multiple types of variations among sources, such as view angles, poses, facial expressions, in a unified framework, so that it can be employed on datasets of vastly different nature. We verify our approach on a variety of data including humans bodies, faces, city scenes and 3D objects. Both the qualitative and quantitative results demonstrate the better performance of our method than the state of the art.}

}Automatic container handling plays an important role in improving the efficiency of the container terminal, promoting the globalization of container trade, and ensuring worker safety. Utilizing vision-based methods to assist container handling has recently drawn attention. However, most existing keyhole detection/localization methods still suffer from coarse keyhole boundaries. To solve this problem, we propose a real-time container hole localization algorithm based on a modified salient object segmentation network. Note that there exists no public container dataset for researchers to fairly compare their approaches, which has hindered the advances of related algorithms in this domain. Therefore, we propose the first large-scale container dataset in this work, containing 1700 container images and 4810 container hole images, for benchmarking container hole location and detection. Through extensive quantitative evaluation and computational complexity analysis, we show our method can simultaneously achieve superior results on precision and real-time performance. Especially, the detection and location precision is 100\% and 99.3\%, surpassing the state-of-the-art-work by 2\% and 62\% respectively. Further, our proposed method only consumes 70 ms (on GPU) or 1.27s (on CPU) per image. We hope the baseline approach, the first released dataset will help benchmark future work and follow-up research on automatic container handling. The dataset is available at https://github.com/qkicen/A-large-scale-container-dataset-and-a-baseline-method-for-container-hole-localization.

@article{wrro185339,

volume = {19},

number = {3},

month = {June},

author = {Y Diao and X Tang and H Wang and ECF Taylor and S Xiao and M Xie and W Cheng},

note = {{\copyright} The Author(s), under exclusive licence to Springer-Verlag GmbH Germany, part of Springer Nature 2022. This version of the article has been accepted for publication, after peer review (when applicable) and is subject to Springer Nature?s AM terms of use, but is not the Version of Record and does not reflect post-acceptance improvements, or any corrections. The Version of Record is available online at: https://doi.org/10.1007/s11554-022-01199-y. Uploaded in accordance with the publisher's self-archiving policy.},

title = {A large-scale container dataset and a baseline method for container hole localization},

publisher = {Springer},

year = {2022},

journal = {Journal of Real-Time Image Processing},

pages = {577--589},

keywords = {Container keyhole localization; Salient object segmentation; Deep learning; Container dataset},

url = {https://eprints.whiterose.ac.uk/185339/},

abstract = {Automatic container handling plays an important role in improving the efficiency of the container terminal, promoting the globalization of container trade, and ensuring worker safety. Utilizing vision-based methods to assist container handling has recently drawn attention. However, most existing keyhole detection/localization methods still suffer from coarse keyhole boundaries. To solve this problem, we propose a real-time container hole localization algorithm based on a modified salient object segmentation network. Note that there exists no public container dataset for researchers to fairly compare their approaches, which has hindered the advances of related algorithms in this domain. Therefore, we propose the first large-scale container dataset in this work, containing 1700 container images and 4810 container hole images, for benchmarking container hole location and detection. Through extensive quantitative evaluation and computational complexity analysis, we show our method can simultaneously achieve superior results on precision and real-time performance. Especially, the detection and location precision is 100\% and 99.3\%, surpassing the state-of-the-art-work by 2\% and 62\% respectively. Further, our proposed method only consumes 70 ms (on GPU) or 1.27s (on CPU) per image. We hope the baseline approach, the first released dataset will help benchmark future work and follow-up research on automatic container handling. The dataset is available at https://github.com/qkicen/A-large-scale-container-dataset-and-a-baseline-method-for-container-hole-localization.}

}Screen-space ambient occlusion (SSAO) shows high efficiency and is widely used in real-time 3D applications. However, using SSAO algorithms in stereo rendering can lead to inconsistencies due to the differences in the screen-space information captured by the left and right eye. This will affect the perception of the scene and may be a source of viewer discomfort. In this paper, we show that the raw obscurance estimation part and subsequent filtering are both sources of inconsistencies. We developed a screen-space method involving both views in conjunction, leading to a stereo-aware raw obscurance estimation method and a stereo-aware bilateral filter. The results show that our method reduces stereo inconsistencies to a level comparable to geometry-based AO solutions, while maintaining the performance benefits of a screen-space approach.

@article{wrro187713,

volume = {5},

number = {1},

month = {May},

author = {P Shi and M Billeter and E Eisemann},

note = {{\copyright} 2022 Copyright held by the owner/author(s). This is an open access article under the terms of the Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0)},

title = {Stereo-consistent screen-space ambient occlusion},

publisher = {Association for Computing Machinery (ACM)},

doi = {10.1145/3522614},

year = {2022},

journal = {Proceedings of the ACM on computer graphics and interactive techniques},

keywords = {screen-space ambient occlusion; stereo consistency; VR},

url = {https://eprints.whiterose.ac.uk/187713/},

abstract = {Screen-space ambient occlusion (SSAO) shows high efficiency and is widely used in real-time 3D applications. However, using SSAO algorithms in stereo rendering can lead to inconsistencies due to the differences in the screen-space information captured by the left and right eye. This will affect the perception of the scene and may be a source of viewer discomfort. In this paper, we show that the raw obscurance estimation part and subsequent filtering are both sources of inconsistencies. We developed a screen-space method involving both views in conjunction, leading to a stereo-aware raw obscurance estimation method and a stereo-aware bilateral filter. The results show that our method reduces stereo inconsistencies to a level comparable to geometry-based AO solutions, while maintaining the performance benefits of a screen-space approach.}

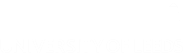

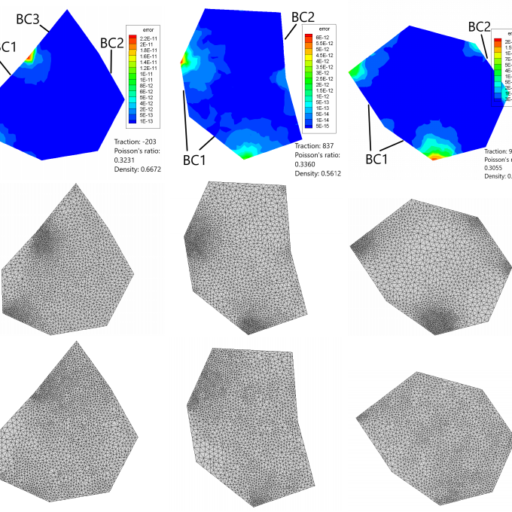

}Numerical simulations of groundwater flow are used to analyze and predict the response of an aquifer system to its change in state by approximating the solution of the fundamental groundwater physical equations. The most used and classical methodologies, such as Finite Difference (FD) and Finite Element (FE) Methods, use iterative solvers which are associated with high computational cost. This study proposes a physics-based convolutional encoder-decoder neural network as a surrogate model to quickly calculate the response of the groundwater system. Holding strong promise in cross-domain mappings, encoder-decoder networks are applicable for learning complex input-output mappings of physical systems. This manuscript presents an Attention U-Net model that attempts to capture the fundamental input-output relations of the groundwater system and generates solutions of hydraulic head in the whole domain given a set of physical parameters and boundary conditions. The model accurately predicts the steady state response of a highly heterogeneous groundwater system given the locations and piezometric head of up to 3 wells as input. The network learns to pay attention only in the relevant parts of the domain and the generated hydraulic head field corresponds to the target samples in great detail. Even relative to coarse finite difference approximations the proposed model is shown to be significantly faster than a comparative state-of-the-art numerical solver, thus providing a base for further development of the presented networks as surrogate models for groundwater prediction.

@article{wrro185207,

volume = {163},

month = {May},

author = {ML Taccari and J Nuttall and X Chen and H Wang and B Minnema and PK Jimack},

note = {{\copyright} 2022 Elsevier Ltd. All rights reserved. This is an author produced version of an article published in Advances in Water Resources. Uploaded in accordance with the publisher's self-archiving policy.},

title = {Attention U-Net as a surrogate model for groundwater prediction},

publisher = {Elsevier},

journal = {Advances in Water Resources},

year = {2022},

keywords = {Attention U-Net; groundwater flow; image-to-image regression; Surrogate modelling},

url = {https://eprints.whiterose.ac.uk/185207/},

abstract = {Numerical simulations of groundwater flow are used to analyze and predict the response of an aquifer system to its change in state by approximating the solution of the fundamental groundwater physical equations. The most used and classical methodologies, such as Finite Difference (FD) and Finite Element (FE) Methods, use iterative solvers which are associated with high computational cost. This study proposes a physics-based convolutional encoder-decoder neural network as a surrogate model to quickly calculate the response of the groundwater system. Holding strong promise in cross-domain mappings, encoder-decoder networks are applicable for learning complex input-output mappings of physical systems. This manuscript presents an Attention U-Net model that attempts to capture the fundamental input-output relations of the groundwater system and generates solutions of hydraulic head in the whole domain given a set of physical parameters and boundary conditions. The model accurately predicts the steady state response of a highly heterogeneous groundwater system given the locations and piezometric head of up to 3 wells as input. The network learns to pay attention only in the relevant parts of the domain and the generated hydraulic head field corresponds to the target samples in great detail. Even relative to coarse finite difference approximations the proposed model is shown to be significantly faster than a comparative state-of-the-art numerical solver, thus providing a base for further development of the presented networks as surrogate models for groundwater prediction.}

}Background National audits aim to reduce variations in quality by stimulating quality improvement. However, varying provider engagement with audit data means that this is not being realised. Aim The aim of the study was to develop and evaluate a quality dashboard (i.e. QualDash) to support clinical teams? and managers? use of national audit data. Design The study was a realist evaluation and biography of artefacts study. Setting The study involved five NHS acute trusts. Methods and results In phase 1, we developed a theory of national audits through interviews. Data use was supported by data access, audit staff skilled to produce data visualisations, data timeliness and quality, and the importance of perceived metrics. Data were mainly used by clinical teams. Organisational-level staff questioned the legitimacy of national audits. In phase 2, QualDash was co-designed and the QualDash theory was developed. QualDash provides interactive customisable visualisations to enable the exploration of relationships between variables. Locating QualDash on site servers gave users control of data upload frequency. In phase 3, we developed an adoption strategy through focus groups. ?Champions?, awareness-raising through e-bulletins and demonstrations, and quick reference tools were agreed. In phase 4, we tested the QualDash theory using a mixed-methods evaluation. Constraints on use were metric configurations that did not match users? expectations, affecting champions? willingness to promote QualDash, and limited computing resources. Easy customisability supported use. The greatest use was where data use was previously constrained. In these contexts, report preparation time was reduced and efforts to improve data quality were supported, although the interrupted time series analysis did not show improved data quality. Twenty-three questionnaires were returned, revealing positive perceptions of ease of use and usefulness. In phase 5, the feasibility of conducting a cluster randomised controlled trial of QualDash was assessed. Interviews were undertaken to understand how QualDash could be revised to support a region-wide Gold Command. Requirements included multiple real-time data sources and functionality to help to identify priorities. Conclusions Audits seeking to widen engagement may find the following strategies beneficial: involving a range of professional groups in choosing metrics; real-time reporting; presenting ?headline? metrics important to organisational-level staff; using routinely collected clinical data to populate data fields; and dashboards that help staff to explore and report audit data. Those designing dashboards may find it beneficial to include the following: ?at a glance? visualisation of key metrics; visualisations configured in line with existing visualisations that teams use, with clear labelling; functionality that supports the creation of reports and presentations; the ability to explore relationships between variables and drill down to look at subgroups; and low requirements for computing resources. Organisations introducing a dashboard may find the following strategies beneficial: clinical champion to promote use; testing with real data by audit staff; establishing routines for integrating use into work practices; involving audit staff in adoption activities; and allowing customisation. Limitations The COVID-19 pandemic stopped phase 4 data collection, limiting our ability to further test and refine the QualDash theory. Questionnaire results should be treated with caution because of the small, possibly biased, sample. Control sites for the interrupted time series analysis were not possible because of research and development delays. One intervention site did not submit data. Limited uptake meant that assessing the impact on more measures was not appropriate. Future work The extent to which national audit dashboards are used and the strategies national audits use to encourage uptake, a realist review of the impact of dashboards, and rigorous evaluations of the impact of dashboards and the effectiveness of adoption strategies should be explored. Study registration This study is registered as ISRCTN18289782. Funding This project was funded by the National Institute for Health and Care Research (NIHR) Health and Social Care Delivery Research programme and will be published in full in Health and Social Care Delivery Research; Vol. 10, No. 12. See the NIHR Journals Library website for further project information.

@article{wrro188565,

volume = {10},

number = {12},

month = {May},

author = {R Randell and N Alvarado and M Elshehaly and L McVey and RM West and P Doherty and D Dowding and AJ Farrin and RG Feltbower and CP Gale and J Greenhalgh and J Lake and M Mamas and R Walwyn and RA Ruddle},

note = {{\copyright} 2022 Randell et al. This is an open access article under the terms of the Creative Commons Attribution 4.0 International License (CC BY 4.0) (https://creativecommons.org/licenses/by/4.0/)},

title = {Design and evaluation of an interactive quality dashboard for national clinical audit data: a realist evaluation},

publisher = {NIHR Journals Library},

year = {2022},

journal = {Health and Social Care Delivery Research},

url = {https://eprints.whiterose.ac.uk/188565/},

abstract = {Background

National audits aim to reduce variations in quality by stimulating quality improvement. However, varying provider engagement with audit data means that this is not being realised.

Aim

The aim of the study was to develop and evaluate a quality dashboard (i.e. QualDash) to support clinical teams? and managers? use of national audit data.

Design

The study was a realist evaluation and biography of artefacts study.

Setting

The study involved five NHS acute trusts.

Methods and results

In phase 1, we developed a theory of national audits through interviews. Data use was supported by data access, audit staff skilled to produce data visualisations, data timeliness and quality, and the importance of perceived metrics. Data were mainly used by clinical teams. Organisational-level staff questioned the legitimacy of national audits. In phase 2, QualDash was co-designed and the QualDash theory was developed. QualDash provides interactive customisable visualisations to enable the exploration of relationships between variables. Locating QualDash on site servers gave users control of data upload frequency. In phase 3, we developed an adoption strategy through focus groups. ?Champions?, awareness-raising through e-bulletins and demonstrations, and quick reference tools were agreed. In phase 4, we tested the QualDash theory using a mixed-methods evaluation. Constraints on use were metric configurations that did not match users? expectations, affecting champions? willingness to promote QualDash, and limited computing resources. Easy customisability supported use. The greatest use was where data use was previously constrained. In these contexts, report preparation time was reduced and efforts to improve data quality were supported, although the interrupted time series analysis did not show improved data quality. Twenty-three questionnaires were returned, revealing positive perceptions of ease of use and usefulness. In phase 5, the feasibility of conducting a cluster randomised controlled trial of QualDash was assessed. Interviews were undertaken to understand how QualDash could be revised to support a region-wide Gold Command. Requirements included multiple real-time data sources and functionality to help to identify priorities.

Conclusions

Audits seeking to widen engagement may find the following strategies beneficial: involving a range of professional groups in choosing metrics; real-time reporting; presenting ?headline? metrics important to organisational-level staff; using routinely collected clinical data to populate data fields; and dashboards that help staff to explore and report audit data. Those designing dashboards may find it beneficial to include the following: ?at a glance? visualisation of key metrics; visualisations configured in line with existing visualisations that teams use, with clear labelling; functionality that supports the creation of reports and presentations; the ability to explore relationships between variables and drill down to look at subgroups; and low requirements for computing resources. Organisations introducing a dashboard may find the following strategies beneficial: clinical champion to promote use; testing with real data by audit staff; establishing routines for integrating use into work practices; involving audit staff in adoption activities; and allowing customisation.

Limitations

The COVID-19 pandemic stopped phase 4 data collection, limiting our ability to further test and refine the QualDash theory. Questionnaire results should be treated with caution because of the small, possibly biased, sample. Control sites for the interrupted time series analysis were not possible because of research and development delays. One intervention site did not submit data. Limited uptake meant that assessing the impact on more measures was not appropriate.

Future work

The extent to which national audit dashboards are used and the strategies national audits use to encourage uptake, a realist review of the impact of dashboards, and rigorous evaluations of the impact of dashboards and the effectiveness of adoption strategies should be explored.

Study registration

This study is registered as ISRCTN18289782.

Funding

This project was funded by the National Institute for Health and Care Research (NIHR) Health and Social Care Delivery Research programme and will be published in full in Health and Social Care Delivery Research; Vol. 10, No. 12. See the NIHR Journals Library website for further project information.}

}Differentiable physics modeling combines physics models with gradient-based learning to provide model explicability and data efficiency. It has been used to learn dynamics, solve inverse problems and facilitate design, and is at its inception of impact. Current successes have concentrated on general physics models such as rigid bodies, deformable sheets, etc, assuming relatively simple structures and forces. Their granularity is intrinsically coarse and therefore incapable of modelling complex physical phenomena. Fine-grained models are still to be developed to incorporate sophisticated material structures and force interactions with gradient-based learning. Following this motivation, we propose a new differentiable fabrics model for composite materials such as cloths, where we dive into the granularity of yarns and model individual yarn physics and yarn-to-yarn interactions. To this end, we propose several differentiable forces, whose counterparts in empirical physics are indifferentiable, to facilitate gradient-based learning. These forces, albeit applied to cloths, are ubiquitous in various physical systems. Through comprehensive evaluation and comparison, we demonstrate our model?s explicability in learning meaningful physical parameters, versatility in incorporating complex physical structures and heterogeneous materials, data-efficiency in learning, and high-fidelity in capturing subtle dynamics.

@misc{wrro184059,

booktitle = {International Conference on Learning Representation 2022},

month = {April},

title = {Fine-grained differentiable physics: a yarn-level model for fabrics},

author = {D Gong and Z Zhu and A Bulpitt and H Wang},

publisher = {OpenReview.net},

year = {2022},

journal = {International Conference on Learning Representation},

url = {https://eprints.whiterose.ac.uk/184059/},

abstract = {Differentiable physics modeling combines physics models with gradient-based

learning to provide model explicability and data efficiency. It has been used to

learn dynamics, solve inverse problems and facilitate design, and is at its inception of impact. Current successes have concentrated on general physics models

such as rigid bodies, deformable sheets, etc, assuming relatively simple structures and forces. Their granularity is intrinsically coarse and therefore incapable

of modelling complex physical phenomena. Fine-grained models are still to be

developed to incorporate sophisticated material structures and force interactions

with gradient-based learning. Following this motivation, we propose a new differentiable fabrics model for composite materials such as cloths, where we dive

into the granularity of yarns and model individual yarn physics and yarn-to-yarn

interactions. To this end, we propose several differentiable forces, whose counterparts in empirical physics are indifferentiable, to facilitate gradient-based learning.

These forces, albeit applied to cloths, are ubiquitous in various physical systems.

Through comprehensive evaluation and comparison, we demonstrate our model?s

explicability in learning meaningful physical parameters, versatility in incorporating complex physical structures and heterogeneous materials, data-efficiency

in learning, and high-fidelity in capturing subtle dynamics.}

}Image generation has been heavily investigated in computer vision, where one core research challenge is to generate images from arbitrarily complex distributions with little supervision. Generative Adversarial Networks (GANs) as an implicit approach have achieved great successes in this direction and therefore been employed widely. However, GANs are known to suffer from issues such as mode collapse, non-structured latent space, being unable to compute likelihoods, etc. In this paper, we propose a new unsupervised non-parametric method named mixture of infinite conditional GANs or MIC-GANs, to tackle several GAN issues together, aiming for image generation with parsimonious prior knowledge. Through comprehensive evaluations across different datasets, we show that MIC-GANs are effective in structuring the latent space and avoiding mode collapse, and outperform state-of-the-art methods. MICGANs are adaptive, versatile, and robust. They offer a promising solution to several well-known GAN issues. Code available:github.com/yinghdb/MICGANs.

@misc{wrro177274,

month = {February},

author = {H Ying and H Wang and T Shao and Y Yang and K Zhou},

note = {{\copyright} 2021 IEEE. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. Uploaded in accordance with the publisher's self-archiving policy.},

booktitle = {2021 IEEE/CVF International Conference on Computer Vision (ICCV 2021)},

title = {Unsupervised Image Generation with Infinite Generative Adversarial Networks},

journal = {2021 IEEE/CVF International Conference on Computer Vision (ICCV)},

pages = {14264--14273},

year = {2022},

keywords = {Image and video synthesis; Machine learning architectures and formulations},

url = {https://eprints.whiterose.ac.uk/177274/},

abstract = {Image generation has been heavily investigated in computer vision, where one core research challenge is to generate images from arbitrarily complex distributions with little supervision. Generative Adversarial Networks (GANs) as an implicit approach have achieved great successes in this direction and therefore been employed widely. However, GANs are known to suffer from issues such as mode collapse, non-structured latent space, being unable to compute likelihoods, etc. In this paper, we propose a new unsupervised non-parametric method named mixture of infinite conditional GANs or MIC-GANs, to tackle several GAN issues together, aiming for image generation with parsimonious prior knowledge. Through comprehensive evaluations across different datasets, we show that MIC-GANs are effective in structuring the latent space and avoiding mode collapse, and outperform state-of-the-art methods. MICGANs are adaptive, versatile, and robust. They offer a promising solution to several well-known GAN issues. Code available:github.com/yinghdb/MICGANs.}

}Many spatial filtering methods have been proposed to enhance the target identification performance for the steady-state visual evoked potential (SSVEP)-based brain?computer interface (BCI). The existing approaches tend to learn spatial filter parameters of a certain target using only the training data from the same stimulus, and they rarely consider the information from other stimuli or the volume conduction problem during the training process. In this article, we propose a novel multi-objective optimization-based high-pass spatial filtering method to improve the SSVEP detection accuracy and robustness. The filters are derived via maximizing the correlation between the training signal and the individual template from the same target whilst minimizing the correlation between the signal from other targets and the template. The optimization will also be subject to the constraint that the sum of filter elements is zero. The evaluation study on two self-collected SSVEP datasets (including 12 and four frequencies, respectively) shows that the proposed method outperformed the compared methods such as canonical correlation analysis (CCA), multiset CCA (MsetCCA), sum of squared correlations (SSCOR), and task-related component analysis (TRCA). The proposed method was also verified on a public 40-class SSVEP benchmark dataset recorded from 35 subjects. The experimental results have demonstrated the effectiveness of the proposed approach for enhancing the SSVEP detection performance.

@article{wrro182567,

volume = {71},

month = {January},

author = {Y Zhang and Z Li and SQ Xie and H Wang and Z Yu and Z Zhang},

note = {{\copyright} 2022 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.},

title = {Multi-Objective Optimization-Based High-Pass Spatial Filtering for SSVEP-Based Brain?Computer Interfaces},

publisher = {IEEE},

journal = {IEEE Transactions on Instrumentation and Measurement},

year = {2022},

keywords = {Brain?computer interface (BCI), electroencephalography (EEG), high-pass spatial filter, multi-objective optimization, steady-state visual evoked potential (SSVEP)},

url = {https://eprints.whiterose.ac.uk/182567/},

abstract = {Many spatial filtering methods have been proposed to enhance the target identification performance for the steady-state visual evoked potential (SSVEP)-based brain?computer interface (BCI). The existing approaches tend to learn spatial filter parameters of a certain target using only the training data from the same stimulus, and they rarely consider the information from other stimuli or the volume conduction problem during the training process. In this article, we propose a novel multi-objective optimization-based high-pass spatial filtering method to improve the SSVEP detection accuracy and robustness. The filters are derived via maximizing the correlation between the training signal and the individual template from the same target whilst minimizing the correlation between the signal from other targets and the template. The optimization will also be subject to the constraint that the sum of filter elements is zero. The evaluation study on two self-collected SSVEP datasets (including 12 and four frequencies, respectively) shows that the proposed method outperformed the compared methods such as canonical correlation analysis (CCA), multiset CCA (MsetCCA), sum of squared correlations (SSCOR), and task-related component analysis (TRCA). The proposed method was also verified on a public 40-class SSVEP benchmark dataset recorded from 35 subjects. The experimental results have demonstrated the effectiveness of the proposed approach for enhancing the SSVEP detection performance.}