Student Projects

The VCG group comprises the teaching staff for our High Performance Graphics (HPG) courses at Leeds: a four-year course for undergraduates and a one-year course for postgraduates. During the degree, our students spend a substantial period of time completing an individual project; this page collects some of the best past projects.

High-Performance Graphics Group Project 2022

Below are some of the 2022 group projects, in which the students built their own game engines.

Quasarts Engine

Mario S. Ivanov, Yizhou Hu, Eleanor Mills, Sven S. Buckland, Chaoshan Huang, 2022

Platinum Engine

Matthew Cumber, Zecheng Hu, Jason J. Kharmawphlang, Shihua Wu, Yichen Xiao, Jinyuan Zhu, 2022

Nox Engine

Domantas Dilys, John Barbone, Huayang Jiang, Jacob Bennett, Zhiyang Zhang, Wei Pan, Nikhil Bharadwaj, 2022

Aurora Engine

Domantas Dilys, John Barbone, Huayang Jiang, Jacob Bennett, Zhiyang Zhang, Wei Pan, Nikhil Bharadwaj, 2022

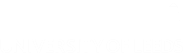

Clouds

John Barbone, 2021

To create volumetric clouds, I calculate the color of each pixel on the screen. For each pixel, I cast a ray through the cloud model and march along it, adjusting the pixel's color at each step based on the amount of cloud encountered and the light reaching that point. This project involved not only working out how to do the ray marching, but also devising my own way of calculating light values, based on my research into the optical properties of clouds.
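The per-pixel loop described above can be sketched as a front-to-back ray march with Beer-Lambert attenuation. This is a minimal sketch under stated assumptions: the spherical `cloud_density` field, the extinction coefficient `sigma_t`, the step settings and the constant `light` term are all hypothetical, and the constant light term is only a placeholder for the author's own light model, which is not reproduced here.

```python
import math


def cloud_density(x, y, z):
    """Hypothetical density field: a soft sphere of radius 1 centred at the origin."""
    r = math.sqrt(x * x + y * y + z * z)
    return max(0.0, 1.0 - r)


def march_ray(origin, direction, steps=64, step_size=0.05,
              sigma_t=4.0, light=1.0, background=0.0):
    """March front to back along one pixel's ray; return (pixel_value, transmittance).

    `light` is a constant in-scattering term standing in for a real lighting model.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    transmittance = 1.0  # fraction of the background still visible through the cloud
    radiance = 0.0
    for i in range(steps):
        t = (i + 0.5) * step_size  # sample the midpoint of each segment
        density = cloud_density(ox + t * dx, oy + t * dy, oz + t * dz)
        if density <= 0.0:
            continue
        # Beer-Lambert law: fraction of light surviving this segment of cloud
        segment = math.exp(-sigma_t * density * step_size)
        # Light scattered toward the eye here, dimmed by the cloud in front of it
        radiance += transmittance * (1.0 - segment) * light
        transmittance *= segment
        if transmittance < 1e-3:  # stop once the cloud is effectively opaque
            break
    return radiance + transmittance * background, transmittance
```

Calling `march_ray((0, 0, -2), (0, 0, 1), steps=80)` traces a ray straight through the sphere; a ray that misses it returns the background unchanged with a transmittance of 1.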

Direct Volume Rendering

Domantas Dilys, 2021

The Direct Volume Rendering project aims to visualise scientific datasets without extracting surfaces from the data, instead treating the data points as a light-emitting medium. The entire dataset can be viewed as volumetric pixels (voxels), each with its own transparency. The implementation uses C++, with OpenGL for the graphics and Qt for the user interface.
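Treating voxels as a light-emitting, semi-transparent medium usually comes down to compositing the samples along each viewing ray. The sketch below shows standard front-to-back alpha compositing for a single ray; the greyscale `transfer_function` that maps a scalar value to colour and opacity is a hypothetical assumption, and the project's own C++/OpenGL implementation is not reproduced.

```python
def transfer_function(value):
    """Hypothetical transfer function: scalar in [0, 1] -> (grey level, opacity)."""
    value = min(max(value, 0.0), 1.0)
    return value, 0.5 * value  # brighter voxels are also more opaque


def composite_front_to_back(samples, opacity_cutoff=0.99):
    """Composite scalar samples ordered from the eye outward.

    Each sample emits colour and absorbs light according to its opacity.
    Returns (accumulated_colour, accumulated_opacity).
    """
    colour = 0.0
    alpha = 0.0
    for value in samples:
        c, a = transfer_function(value)
        # Only the light not yet blocked by nearer samples can reach the eye
        colour += (1.0 - alpha) * a * c
        alpha += (1.0 - alpha) * a
        if alpha >= opacity_cutoff:  # early ray termination
            break
    return colour, alpha
```

Front-to-back order gives the same result as back-to-front "over" compositing, but it allows the loop to stop early once the ray is nearly opaque.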

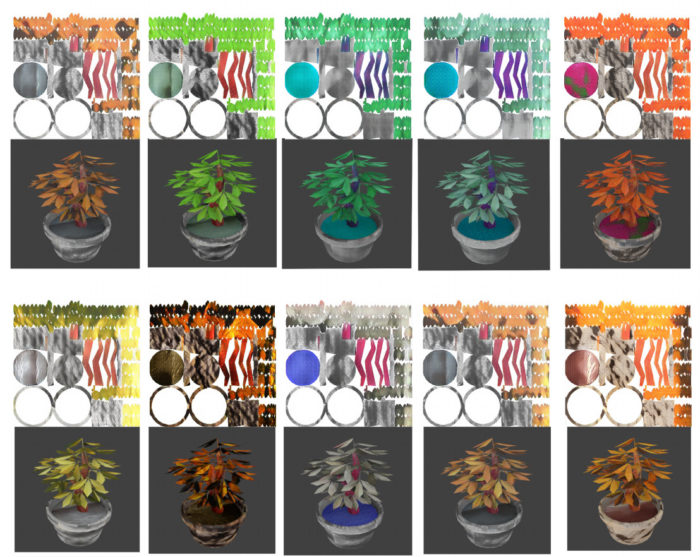

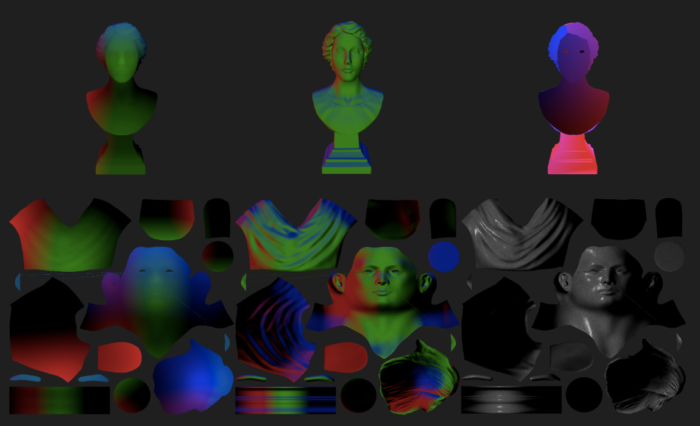

Learning to texture 3D models

Isaiah Fergile-Leybourne, 2021

This project synthesises new textures for three-dimensional models. Given a set of texture classes and location data, it generates novel textures using image-to-image translation systems such as Pix2Pix and BicycleGAN (code, video).

World Generation using Model Synthesis

Zecheng Hu, 2021

The application takes an example 3D model and slices it into small chunks; it then synthesises a novel model by joining together copies of these chunks in the ways the example allows. The project is implemented in Unity.
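The idea of only joining chunks in ways given by the example can be illustrated in one dimension. In this heavily simplified sketch (an assumption, not the project's Unity code), chunks are labels in a sequence, the example defines which labels may sit next to each other, and a backward feasibility pass guarantees that the forward random choices never reach a dead end. The real technique works on 3D chunks with neighbours along every axis.

```python
import random


def adjacency_from_example(example):
    """Every (left, right) pair of labels that appears side by side in the example."""
    return {(a, b) for a, b in zip(example, example[1:])}


def synthesise(example, length, seed=0):
    """Build a new sequence whose neighbouring pairs all occur in the example.

    Returns None if no sequence of the requested length satisfies the constraints.
    """
    if length <= 0:
        return []
    rng = random.Random(seed)
    allowed = adjacency_from_example(example)
    labels = sorted(set(example))
    # feasible[i]: labels that can sit at position i and still be completed to the end
    feasible = [set() for _ in range(length)]
    feasible[length - 1] = set(labels)
    for i in range(length - 2, -1, -1):
        feasible[i] = {a for a in labels
                       if any((a, b) in allowed for b in feasible[i + 1])}
    if not feasible[0]:
        return None
    result = [rng.choice(sorted(feasible[0]))]
    for i in range(1, length):
        # Options must follow the previous label and keep the rest completable
        options = sorted(b for b in feasible[i] if (result[-1], b) in allowed)
        result.append(rng.choice(options))
    return result
```

For example, `synthesise(list("GGSSWWSSGG"), 30)` (grass, sand, water) produces a new 30-chunk strip in which water never touches grass directly, because that pair never occurs in the example.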

Annual HPG Student Showcase

2021

The Raven team (repo, video) won first place for Game Technology at the Game Republic Student Showcase.

Rendering of Deep Water using Spectral Domain Techniques in Real Time

Álvaro Florencio de Marcos Alés, 2020 MSc project

We apply the Inverse Fast Fourier Transform (IFFT) to obtain the final ocean surface as a displacement map, which is then rendered with an environment-map compute shader to accelerate the accurate computation of lighting, reflections, and colour. The code is on GitHub.
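A common formulation of this spectral approach, following Tessendorf, draws random amplitudes from a wave spectrum, animates them with the deep-water dispersion relation ω = √(g k), and transforms them back to heights with an inverse FFT. The sketch below is a 1D version in pure Python with a recursive radix-2 inverse FFT; the Phillips-like spectrum and its parameters (`amplitude`, `wind_speed`, grid size, domain length) are hypothetical choices, whereas an ocean surface needs a 2D transform, which this project runs on the GPU.

```python
import cmath
import math
import random

G = 9.81  # gravitational acceleration, m/s^2


def inverse_fft(spectrum):
    """Unnormalised inverse DFT, x[j] = sum_n X[n] * exp(2*pi*i*n*j/N), via radix-2 Cooley-Tukey."""
    n = len(spectrum)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(spectrum[0])]
    even = inverse_fft(spectrum[0::2])
    odd = inverse_fft(spectrum[1::2])
    out = [0j] * n
    for m in range(n // 2):
        twiddle = cmath.exp(2j * math.pi * m / n) * odd[m]
        out[m] = even[m] + twiddle
        out[m + n // 2] = even[m] - twiddle
    return out


def wavenumber(index, n, length):
    """Signed wavenumber of FFT bin `index` for a periodic domain of `length` metres."""
    m = index if index < n // 2 else index - n
    return 2.0 * math.pi * m / length


def initial_amplitudes(n, length, amplitude=1e-3, wind_speed=10.0, seed=1):
    """Gaussian random amplitudes h0(k) shaped by a 1D Phillips-like spectrum (hypothetical parameters)."""
    rng = random.Random(seed)
    largest_wave = wind_speed ** 2 / G
    h0 = []
    for index in range(n):
        k = abs(wavenumber(index, n, length))
        if k == 0.0:
            h0.append(0j)  # no mean-height component
            continue
        power = amplitude * math.exp(-1.0 / (k * largest_wave) ** 2) / k ** 4
        h0.append(complex(rng.gauss(0, 1), rng.gauss(0, 1)) * math.sqrt(power / 2.0))
    return h0


def spectrum_at(h0, length, t):
    """h~(k, t) = h0(k) e^{i w t} + conj(h0(-k)) e^{-i w t}; Hermitian, so the heights are real."""
    n = len(h0)
    out = []
    for index in range(n):
        omega = math.sqrt(G * abs(wavenumber(index, n, length)))  # deep-water dispersion
        mirrored = h0[(-index) % n]
        out.append(h0[index] * cmath.exp(1j * omega * t)
                   + mirrored.conjugate() * cmath.exp(-1j * omega * t))
    return out


def heights(h0, length, t):
    """Water heights at x_j = j * length / N for time t."""
    return [z.real for z in inverse_fft(spectrum_at(h0, length, t))]
```

For instance, `heights(initial_amplitudes(64, 100.0), 100.0, 2.0)` gives 64 heights across a 100 m strip; evaluating it at successive times animates the waves.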

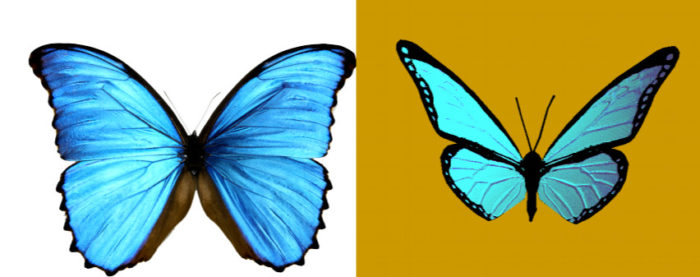

Rendering Biological Iridescence

Manigandan Rajan, 2020 MSc project

(Above left: photograph of an iridescent butterfly. Above right: a rendering of the iridescent material.)

Iridescence is a phenomenon in which the colour of a material changes with the viewing position. Simulating such a material is time-consuming, so this project explains how to implement traditional spectral rendering solutions on modern graphics hardware using Vulkan. Manigandan shared their code on GitHub.

Multi-User VR Driving Simulator Game

Jake Meegan, 2020 MSc project

This project creates a multi-user VR driving game with realistic vehicle physics and a believable vehicle AI that can patrol a city, simulating traffic flow. It aims to be realistic, performant and immersive while staying above the 90 frames per second (FPS) that VR requires for comfort and to reduce motion sickness. Features include realistic vehicle physics, vehicle AI and VR integration. Jake released the source code on GitHub.

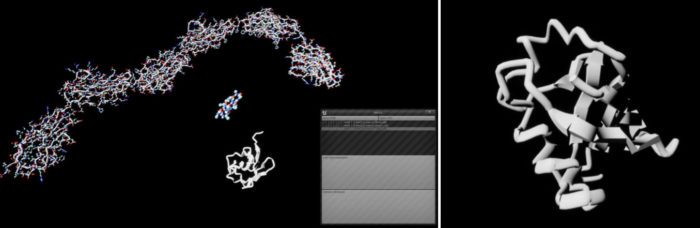

Molecular Dynamics Visualisation in VR

Govind Nangapuram Venkatesh, 2020 MSc project

In the field of Molecular Dynamics, many experiments are carried out at the molecular level to examine the interactions between atoms and molecules. Simulations model these complex interactions, and visualising the results helps us understand and interpret them. This project aims to create a new type of visualiser capable of rendering these complex molecules in virtual reality, to make the simulation results easier to interpret. Our system is implemented using Unreal Engine 4, and you can find the source code on GitHub.

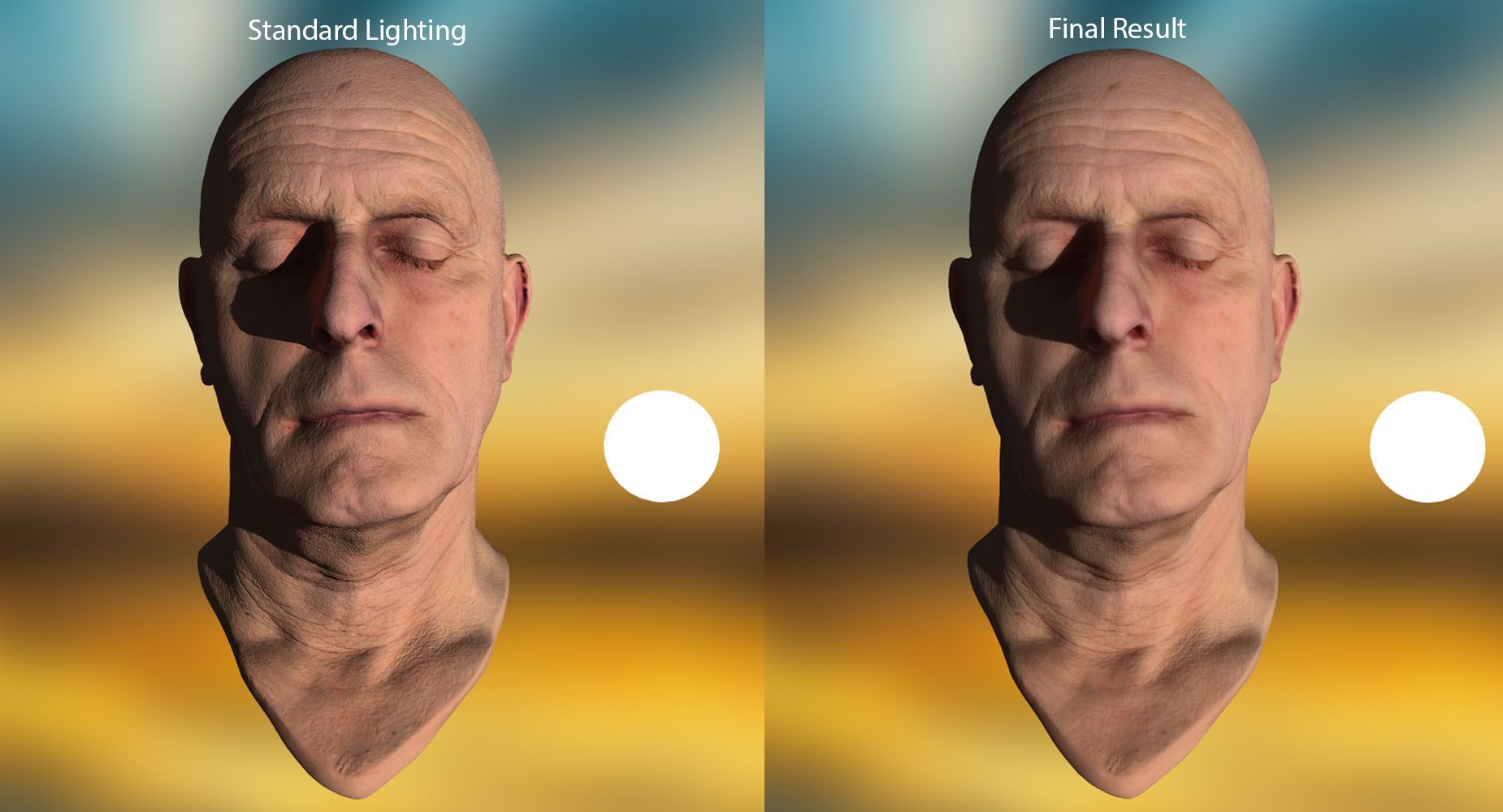

Separable Subsurface Scattering for Photo-realistic Real-Time Skin Rendering

Calum McManus, 2019 MSc project

This project implemented separable subsurface scattering using the modern Vulkan API (rather than DirectX/Direct3D) and combined it with a translucency/light transport algorithm, to show that efficient, realistic run-time rendering of organic materials is possible with the modern tools available to game developers. The project pulls together key elements of research from many different papers to produce the best results, while keeping the solution scalable by applying it entirely in screen space. The end result runs in only 0.35 milliseconds per frame and is detailed enough to be well suited to first-person real-time scenarios. You can read more on Calum's webpage.
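Separable subsurface scattering gets much of its speed from approximating the skin's diffusion profile with a kernel that can be applied as two 1D screen-space passes instead of one 2D pass. The sketch below shows only that separability on a small greyscale image, using a plain Gaussian as a hypothetical stand-in; skin shaders typically use a kernel fitted to a measured diffusion profile and scale its width by depth, neither of which is shown here.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalised 1D Gaussian weights for offsets -radius..radius (stand-in for a skin profile)."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_pass(image, kernel, horizontal):
    """Convolve a list-of-rows image along one axis, clamping samples at the borders."""
    height, width = len(image), len(image[0])
    radius = len(kernel) // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            for i, weight in enumerate(kernel):
                offset = i - radius
                if horizontal:
                    sx, sy = min(max(x + offset, 0), width - 1), y
                else:
                    sx, sy = x, min(max(y + offset, 0), height - 1)
                total += weight * image[sy][sx]
            out[y][x] = total
    return out


def separable_blur(image, radius=3, sigma=1.5):
    """Horizontal then vertical pass: 2r+1 taps per pass instead of (2r+1)^2 for a full 2D kernel."""
    kernel = gaussian_kernel(radius, sigma)
    return blur_pass(blur_pass(image, kernel, horizontal=True), kernel, horizontal=False)
```

With `radius=3`, each pixel reads 7 + 7 = 14 samples rather than 49, and the result is identical to the full 2D convolution with the same clamped borders.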

A Virtual Reality Driving Simulator

George Burton, 2019 MSc project

A Virtual Reality (VR) driving game was developed using Unreal Engine 4 (UE4), on top of which a crowd simulator and a procedural city generation system were built. In the project we used Wang Tiles to generate a cityscape and road system, created natural crowd movement with the Karamouzas crowd simulation model, and added driving simulator gameplay with pedestrian collision and scoring.
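Wang tiles are square tiles with a colour on each edge, placed so that touching edges share a colour. A simple way to fill a grid is scanline order, picking at random among the tiles that match the neighbours already placed. The sketch below assumes a hypothetical complete set of 16 tiles with two edge colours, which guarantees that a matching tile always exists; in a city generator an edge colour might encode, say, whether a road crosses that edge, but the project's actual tile set is not described.

```python
import random

# Hypothetical complete tile set: edge colours as (north, east, south, west), two colours each
TILES = [(n, e, s, w) for n in (0, 1) for e in (0, 1) for s in (0, 1) for w in (0, 1)]


def wang_tiling(width, height, seed=0):
    """Fill a grid row by row (row 0 is the north edge), choosing at random among tiles
    whose edges match the already-placed west and north neighbours."""
    rng = random.Random(seed)
    grid = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            candidates = [
                t for t in TILES
                if (x == 0 or t[3] == grid[y][x - 1][1])      # west edge meets neighbour's east edge
                and (y == 0 or t[0] == grid[y - 1][x][2])     # north edge meets neighbour's south edge
            ]
            grid[y][x] = rng.choice(candidates)
    return grid
```

Because the set is complete, every cell has at least four candidates, so the scan never needs to backtrack; smaller tile sets trade that guarantee for less memory.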

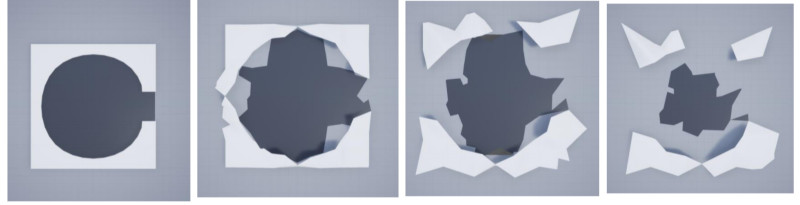

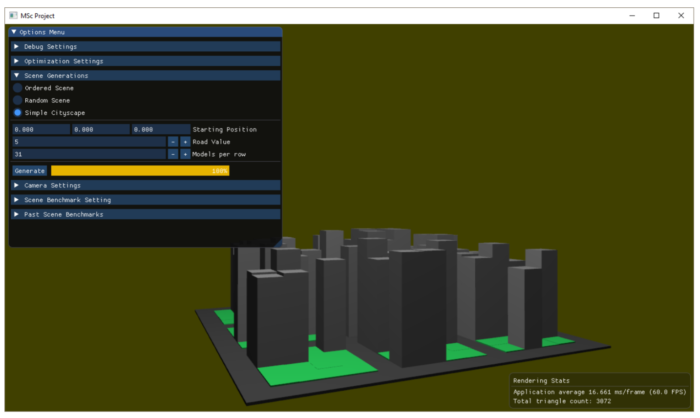

Massive Mesh Simplification

Ryan Carty, 2019 MSc project

This project takes huge environments as input (1-10 million triangles) and breaks them into subsets for rendering. Various methods were researched for breaking a large scene into hierarchical subsets, alongside mesh simplification algorithms to reduce the triangle count of a single mesh.
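The source does not say which simplification algorithms were used. As one simple example, vertex clustering snaps vertices to a uniform grid, merges all vertices in a cell into their average, and discards triangles that collapse. The sketch below is a minimal version under that assumption; `cell_size` controls how aggressive the simplification is.

```python
import math


def cluster_simplify(vertices, triangles, cell_size):
    """Vertex-clustering simplification.

    vertices: list of (x, y, z); triangles: list of (i, j, k) vertex indices.
    Returns (new_vertices, new_triangles).
    """
    cell_index = {}   # grid cell -> index of its merged vertex
    sums, counts, remap = [], [], []
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        if cell not in cell_index:
            cell_index[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        merged = cell_index[cell]
        sums[merged][0] += x
        sums[merged][1] += y
        sums[merged][2] += z
        counts[merged] += 1
        remap.append(merged)
    # Each merged vertex sits at the average of the original vertices in its cell
    new_vertices = [tuple(c / n for c in s) for s, n in zip(sums, counts)]
    new_triangles, seen = [], set()
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # duplicate produced by merging; keep the first orientation
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles
```

Vertex clustering is fast and needs no connectivity information, but it is lossy and ignores surface features, so error-driven methods are often preferred for close-up detail.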

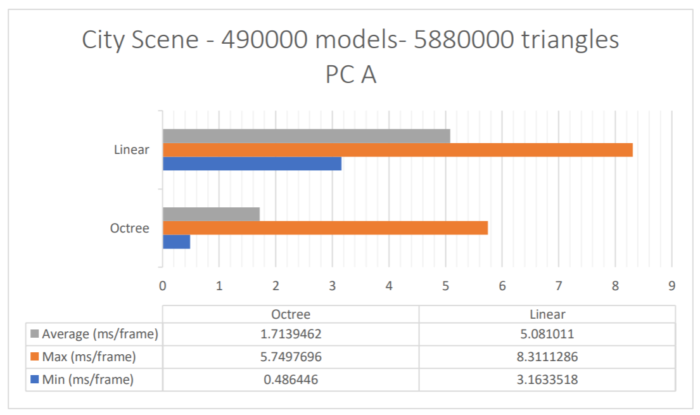

We explore the use of an octree data structure to speed up view frustum culling within a three-dimensional scene. This approach greatly reduces the time taken compared with more traditional brute-force methods. The implemented solution was tested and evaluated, and potential future improvements are discussed.
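A minimal sketch of octree-accelerated frustum culling: each node's bounding box is tested against the frustum planes, a node entirely outside rejects its whole subtree with one test, and a node entirely inside accepts everything below it without further tests. Assumptions: objects are reduced to points (for example their centres), planes are given as `(nx, ny, nz, d)` with the inside satisfying `n·p + d >= 0`, and the node capacity and depth limits are arbitrary; the project's own data structure is not described.

```python
def point_inside(point, planes):
    """True if the point lies on the inner side of every plane (nx, ny, nz, d)."""
    return all(nx * point[0] + ny * point[1] + nz * point[2] + d >= 0 for nx, ny, nz, d in planes)


def classify_box(box_min, box_max, planes):
    """Classify an axis-aligned box as 'outside', 'inside' or 'intersect'."""
    result = "inside"
    for nx, ny, nz, d in planes:
        normal = (nx, ny, nz)
        # Corner furthest along the normal (p-vertex) and corner nearest to it (n-vertex)
        far = [box_max[k] if normal[k] >= 0 else box_min[k] for k in range(3)]
        near = [box_min[k] if normal[k] >= 0 else box_max[k] for k in range(3)]
        if nx * far[0] + ny * far[1] + nz * far[2] + d < 0:
            return "outside"
        if nx * near[0] + ny * near[1] + nz * near[2] + d < 0:
            result = "intersect"
    return result


class OctreeNode:
    def __init__(self, box_min, box_max, depth=0, max_depth=5, capacity=4):
        self.box_min, self.box_max = box_min, box_max
        self.depth, self.max_depth, self.capacity = depth, max_depth, capacity
        self.items = []        # (name, point) pairs held by this leaf
        self.children = None   # eight OctreeNodes once split

    def _mid(self):
        return tuple((lo + hi) / 2.0 for lo, hi in zip(self.box_min, self.box_max))

    def _child_for(self, point):
        mid = self._mid()
        # Bit k of the child index is set when the point is in the upper half along axis k
        return self.children[sum(1 << k for k in range(3) if point[k] >= mid[k])]

    def insert(self, name, point):
        if self.children is not None:
            self._child_for(point).insert(name, point)
            return
        self.items.append((name, point))
        if len(self.items) > self.capacity and self.depth < self.max_depth:
            mid = self._mid()
            self.children = []
            for i in range(8):
                lo = tuple(mid[k] if (i >> k) & 1 else self.box_min[k] for k in range(3))
                hi = tuple(self.box_max[k] if (i >> k) & 1 else mid[k] for k in range(3))
                self.children.append(OctreeNode(lo, hi, self.depth + 1, self.max_depth, self.capacity))
            items, self.items = self.items, []
            for item_name, item_point in items:
                self._child_for(item_point).insert(item_name, item_point)

    def visible(self, planes, out=None, fully_inside=False):
        """Collect names of points inside the frustum, pruning whole subtrees where possible."""
        if out is None:
            out = []
        if not fully_inside:
            state = classify_box(self.box_min, self.box_max, planes)
            if state == "outside":
                return out
            fully_inside = state == "inside"
        for name, point in self.items:
            if fully_inside or point_inside(point, planes):
                out.append(name)
        for child in self.children or []:
            child.visible(planes, out, fully_inside)
        return out
```

In a renderer, the six planes would typically be extracted from the combined view-projection matrix each frame, and `visible` returns the objects to draw; the result matches a brute-force test of every object, but most of the scene is rejected a whole node at a time.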

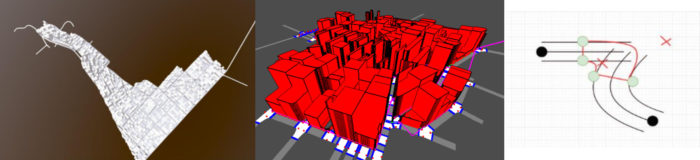

Procedural Modelling of Urban Areas

Nikolay Slavev, 2019 BSc project

This project studies the rules applicable to architectural modelling, and more specifically to urban areas. Every such area poses a number of challenges, encompassing population density, environmental influences, building aesthetics and street transport modalities, for which adequate planning is essential and requires data. Doing all of this manually leads to error-prone, costly and time-consuming development. Our goal is to approach the problem of modelling cities procedurally while still allowing users to make adjustments to the resulting model. This attempts to combine the benefits of manual and automatic approaches, reducing both the clumsiness of manual modelling and the risk of relying fully on automatic generation. Procedural modelling systems benefit industries such as geographic design, urban planning, game development and film production.

Gatling Games Engine

Joshua Crinall, George Loines, & Michael Nisbet, 2018 Group Project

Gatling is a custom game engine built for the third-year group project. Like the majority of coursework for the HPG course, it was written in C++ for desktop computers, as well as for HTC Vive virtual reality headsets. The project aimed to create a detailed game engine with many cutting-edge features, including physically based sky rendering, microfacet-specular volumetric fog, procedural terrain generation, tessellated water rendering and a complete resource import pipeline.

The project won first prize for game technology at the 2018 Game Republic Student Showcase.

You can read more about the Gatling Games Engine here, and view the source code here.

Real-time Adversarial Gaming with a Robot in Virtual Reality

Brian Law, 2018 MSc project

Virtual reality (VR) combined with physical simulation is a powerful platform for developing novel interactions between humans and robots. In this project we implement a ball-throwing game with a virtual robot. The project uses an Unreal Engine 4 instance and a URDF robot description, connected to an HTC Vive head-mounted display and handsets. This configuration applies the user's motions to a virtual character that can interact directly with the robot in the virtual scene; in this case, to play catch with it.

Preferred Shading

Ryan Needham, 2018 MSc project

Preferred Shading is a rendering architecture based on work at Pixar in the 1980s to develop a rasterisation-based alternative to recursive ray tracing for the rapid offline generation of high-fidelity computer-generated images. This project implements Preferred Shading in the Vulkan graphics API and can render realistic images interactively. Shading is performed in texture space before rasterisation occurs, which is why it is also referred to as Texture Space Lighting. Shading in this way also means that the entire surface of each object is shaded, not just the visible parts, which has led some to call it Object Space Lighting.

Ryan has more information on his project here, including Vulkan source code.

Real-Time Point-Based Rendering

Stavros Diolatzis, 2018 BSc project

This project explores a point-based rendering approach for real-time applications such as virtual museums and video games. Compared with polygon-based rendering (above, right), point-based rendering (above, left) promises increased performance and a reduced memory footprint at high resolutions. To achieve point-based rendering (PBR) at interactive frame rates, we take advantage of modern rendering techniques such as programmable shader stages and multi-pass rendering. Finally, we applied the renderer to a virtual museum and a video game engine.

Virtual Reality

Philip Nilsson and Khen Cruzat, 2018 BSc project

In this third-year project, we developed a game that allows users to experiment with river flows over a hilly landscape. Users manipulate the water flow with the controllers and explore the consequences of each action in the immersive VR environment, creating a playful interactive experience. The work involved solving graph problems to predict the water's flow, as well as incorporating all the gameplay into the Unreal game engine.
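The source does not say which graph problems were solved. One common way to predict where water goes on a heightmap is to treat cells as graph nodes, give each cell an edge to its lowest lower neighbour, and accumulate flow along those edges. The sketch below does this for a 4-connected grid under that assumption, with every cell contributing one unit of rain.

```python
def flow_accumulation(heights):
    """Route one unit of rain from every cell to its steepest-descent neighbour
    (4-connected) and accumulate it downstream. Cells with no lower neighbour are sinks."""
    h, w = len(heights), len(heights[0])
    downstream = {}
    for y in range(h):
        for x in range(w):
            best, best_h = None, heights[y][x]
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and 0 <= ny < h and heights[ny][nx] < best_h:
                    best, best_h = (nx, ny), heights[ny][nx]
            downstream[(x, y)] = best
    flow = [[1.0] * w for _ in range(h)]
    # Highest cells first: edges always point strictly downhill, so each cell's
    # inflow is complete before it passes its total on
    for x, y in sorted(downstream, key=lambda c: heights[c[1]][c[0]], reverse=True):
        target = downstream[(x, y)]
        if target is not None:
            tx, ty = target
            flow[ty][tx] += flow[y][x]
    return flow
```

Because every edge points strictly downhill, the drainage graph has no cycles, and cells with large accumulated flow trace out the rivers.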

Many thanks to all the students for their permission to publish their work.