A generic framework for editing and synthesizing multimodal data with relative emotion strength

Computer Animation and Virtual Worlds

J.C.P. Chan, H.P.H. Shum, H. Wang, L. Yi, W. Wei, and E.S.L. Ho

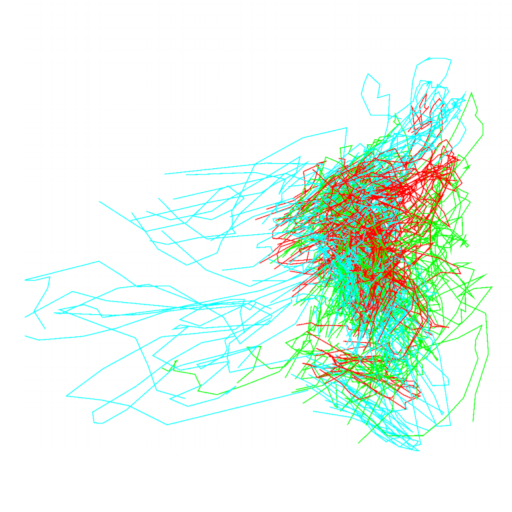

Emotion is a core element of performance. In computer animation, body motions and facial expressions are two popular mediums through which a character expresses emotion. However, there has been limited research on how to synthesize these two types of character movement at different levels of emotion strength with intuitive control, which is difficult to model effectively. In this work, we explore a common model that can represent emotion for both body motion synthesis and facial expression synthesis. Unlike previous work that encodes emotions into discrete motion style descriptors, we propose a continuous control indicator called emotion strength, and we present a data-driven approach that synthesizes motions with fine control over emotion by adjusting this indicator. Rather than interpolating motion features to synthesize new motion as in existing work, our method explicitly learns a model that maps low-level motion features to emotion strength. Because the motion synthesis model is learned in the training stage, the computation time required to synthesize motions at run time is very low. We further demonstrate the generality of the proposed framework by editing 2D face images using relative emotion strength. As a result, our method can be applied to interactive applications such as computer games, image editing tools, and virtual reality applications, as well as offline applications such as animation and movie production.
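To make the idea of mapping low-level features to a continuous emotion strength more concrete, the following is a minimal sketch and not the method of the paper. It assumes a plain linear model fitted by least squares on synthetic data with absolute strength labels, whereas the abstract speaks of relative emotion strength and does not specify the model. The names `fit_strength_model`, `predict_strength`, and `edit_to_strength`, the six-dimensional feature vectors, and the target value are all hypothetical.

```python
import numpy as np


def fit_strength_model(features, strengths):
    """Least-squares fit of strength ~= features @ w + b (assumed linear model)."""
    # Append a column of ones so the bias b is learned with the weights.
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(X, strengths, rcond=None)
    return coef[:-1], coef[-1]


def predict_strength(x, w, b):
    """Emotion strength predicted by the linear model for one feature vector."""
    return float(x @ w + b)


def edit_to_strength(x, w, b, target):
    """Smallest (Euclidean) change to x that makes the model predict `target`."""
    # Moving along w changes the prediction by delta * (w @ w).
    delta = (target - predict_strength(x, w, b)) / (w @ w)
    return x + delta * w


# Synthetic stand-in for low-level motion features (hypothetical data).
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 6))
true_w = np.array([0.8, -0.3, 0.5, 0.0, 0.2, -0.6])
strengths = features @ true_w + 0.1 + 0.01 * rng.normal(size=200)

# Training stage: learn the mapping once.
w, b = fit_strength_model(features, strengths)

# Run time: editing a sample costs only a dot product and a scaled addition.
edited = edit_to_strength(features[0], w, b, target=1.5)
```

In this sketch all learning happens in `fit_strength_model`, and the run-time edit is a closed-form update. This mirrors, under the stated assumptions, why a model learned at training time can keep the synthesis cost low.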